Introduction to Alertmanager and its mechanisms

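Alertmanager handles alerts sent by client applications such as the Prometheus server. It deduplicates and groups them, then routes them to the correct receiver, such as email or Slack. Alertmanager also provides grouping, silencing, and inhibition of alerts.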

Grouping

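Grouping combines alerts of a similar nature into a single notification. This is especially useful during larger outages, when many systems fail at once and hundreds or even thousands of alerts may fire simultaneously.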
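To make the idea concrete, here is a minimal Go sketch of grouping. It is not Alertmanager's actual implementation. Alerts are bucketed by the values of the labels listed in a group_by-style list, and each bucket stands for one notification. The `Alert` type, `groupKey`, and `groupAlerts` are hypothetical names used only for illustration.

```go
package main

import (
	"fmt"
	"sort"
	"strings"
)

// Alert is a simplified, hypothetical alert that carries only its labels.
type Alert struct {
	Labels map[string]string
}

// groupKey builds a key from the values of the group_by labels only, so
// alerts that agree on those labels land in the same group.
func groupKey(a Alert, groupBy []string) string {
	parts := make([]string, 0, len(groupBy))
	for _, name := range groupBy {
		parts = append(parts, name+"="+a.Labels[name])
	}
	return "{" + strings.Join(parts, ",") + "}"
}

// groupAlerts buckets alerts by group key; each bucket would be sent
// as one notification.
func groupAlerts(alerts []Alert, groupBy []string) map[string][]Alert {
	groups := make(map[string][]Alert)
	for _, a := range alerts {
		k := groupKey(a, groupBy)
		groups[k] = append(groups[k], a)
	}
	return groups
}

func main() {
	alerts := []Alert{
		{Labels: map[string]string{"job": "node", "instance": "a"}},
		{Labels: map[string]string{"job": "node", "instance": "b"}},
		{Labels: map[string]string{"job": "mysql", "instance": "c"}},
	}
	groups := groupAlerts(alerts, []string{"job"}) // like group_by: [job]
	keys := make([]string, 0, len(groups))
	for k := range groups {
		keys = append(keys, k)
	}
	sort.Strings(keys) // map order is random; sort for stable output
	for _, k := range keys {
		fmt.Printf("%s: %d alert(s)\n", k, len(groups[k]))
	}
}
```

With group_by set to job, the three alerts collapse into two notifications: {job=mysql} with one alert and {job=node} with two.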

Inhibition

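Inhibition suppresses notifications for certain alerts while another alert that causes them is already firing. For example, when a network becomes unreachable, the resulting connection-failure alerts from services on that network can be muted.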
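A rough Go sketch of the check behind an inhibit rule is shown below. A target alert is muted if some firing source alert matches the source matchers and has the same values for the "equal" labels. The sketch is simplified to exact-match labels and ignores Alertmanager's regex matchers and self-inhibition handling. The alert names `NetworkUnreachable` and `DBConnFailed` are hypothetical.

```go
package main

import "fmt"

// Labels is a simplified label set.
type Labels map[string]string

// InhibitRule is a simplified, hypothetical shape of an inhibit rule:
// exact-match source/target matchers plus the labels that must be equal.
type InhibitRule struct {
	SourceMatch map[string]string
	TargetMatch map[string]string
	Equal       []string
}

// matches reports whether every matcher label has exactly the given value.
func matches(l Labels, m map[string]string) bool {
	for k, v := range m {
		if l[k] != v {
			return false
		}
	}
	return true
}

// inhibited reports whether target should be muted because some firing
// source alert satisfies the rule and shares the Equal label values.
func inhibited(target Labels, firing []Labels, r InhibitRule) bool {
	if !matches(target, r.TargetMatch) {
		return false
	}
	for _, src := range firing {
		if !matches(src, r.SourceMatch) {
			continue
		}
		same := true
		for _, name := range r.Equal {
			if src[name] != target[name] {
				same = false
				break
			}
		}
		if same {
			return true
		}
	}
	return false
}

func main() {
	rule := InhibitRule{
		SourceMatch: map[string]string{"alertname": "NetworkUnreachable"},
		TargetMatch: map[string]string{"severity": "warning"},
		Equal:       []string{"cluster"},
	}
	firing := []Labels{{"alertname": "NetworkUnreachable", "cluster": "prod"}}
	// Same cluster as the network alert: muted.
	fmt.Println(inhibited(Labels{"alertname": "DBConnFailed", "severity": "warning", "cluster": "prod"}, firing, rule))
	// Different cluster: still notified.
	fmt.Println(inhibited(Labels{"alertname": "DBConnFailed", "severity": "warning", "cluster": "dev"}, firing, rule))
}
```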

Silences

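A silence is a simple way to mute alerts for a given period of time. Each silence is defined by a set of label matchers and a start and end time. Alerts that match it produce no notifications during that window.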

Default configuration

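Open the Alertmanager web UI and select the Status page to see the current config. Below is the default configuration after installing prometheus-operator with Helm. How can it be changed?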

global:
  resolve_timeout: 4m
  http_config: {}
  smtp_hello: localhost
  smtp_require_tls: true
  pagerduty_url: https://events.pagerduty.com/v2/enqueue
  hipchat_api_url: https://api.hipchat.com/
  opsgenie_api_url: https://api.opsgenie.com/
  wechat_api_url: https://qyapi.weixin.qq.com/cgi-bin/
  victorops_api_url: https://alert.victorops.com/integrations/generic/20131114/alert/
route:
  receiver: "null"
  group_by:
  - job
  routes:
  - receiver: "null"
    match:
      severity: info
  group_wait: 30s
  group_interval: 5m
  repeat_interval: 12h
receivers:
- name: "null"
templates: []

Alertmanager configuration reference

See https://www.jianshu.com/p/239b145e2acc

Modifying the default configuration

The default configuration is mounted into the Pod from a Secret in the same namespace, so changing that Secret changes the configuration.

The Secret alertmanager-prometheus-operator-alertmanager mainly contains alertmanager.yaml, Base64-encoded (encoded, not encrypted). You can view it with:

kubectl edit secret alertmanager-prometheus-operator-alertmanager -n monitoring

Copy the string that follows alertmanager.yaml and decode it to see the original value:

echo "Imdsb2JhbCI6IAogICJyZXNvbHZlX3RpbWVvdXQiOiAiNW0iCiJyZWNlaXZlcnMiOiAKLSAibmFtZSI6ICJudWxsIgoicm91dGUiOiAKICAiZ3JvdXBfYnkiOiAKICAtICJqb2IiCiAgImdyb3VwX2ludGVydmFsIjogIjVtIgogICJncm91cF93YWl0IjogIjMwcyIKICAicmVjZWl2ZXIiOiAibnVsbCIKICAicmVwZWF0X2ludGVydmFsIjogIjEyaCIKICAicm91dGVzIjogCiAgLSAibWF0Y2giOiAKICAgICAgImFsZXJ0bmFtZSI6ICJEZWFkTWFuc1N3aXRjaCIKICAgICJyZWNlaXZlciI6ICJudWxsIg==" | base64 -d

"global":
  "resolve_timeout": "5m"
"receivers":
- "name": "null"
"route":
  "group_by":
  - "job"
  "group_interval": "5m"
  "group_wait": "30s"
  "receiver": "null"
  "repeat_interval": "12h"
  "routes":
  - "match":
      "alertname": "DeadMansSwitch"
    "receiver": "null"
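Because Secret data is plain standard Base64, decoding it is all that is needed to recover the YAML. The sketch below does in Go what `base64 -d` does above. `decodeSecretValue` is a hypothetical helper name. The sample is the first 48 characters of the value shown above; replace it with the full string copied from the Secret.

```go
package main

import (
	"encoding/base64"
	"fmt"
)

// decodeSecretValue decodes one value from a Secret's data field.
// Secret values are standard Base64 (an encoding, not encryption).
func decodeSecretValue(encoded string) (string, error) {
	raw, err := base64.StdEncoding.DecodeString(encoded)
	if err != nil {
		return "", err
	}
	return string(raw), nil
}

func main() {
	// First 48 characters of the alertmanager.yaml value from the Secret.
	sample := "Imdsb2JhbCI6IAogICJyZXNvbHZlX3RpbWVvdXQiOiAiNW0i"
	text, err := decodeSecretValue(sample)
	if err != nil {
		fmt.Println("decode failed:", err)
		return
	}
	fmt.Println(text)
}
```

The sample decodes to the first two lines of the configuration: "global": followed by "resolve_timeout": "5m".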

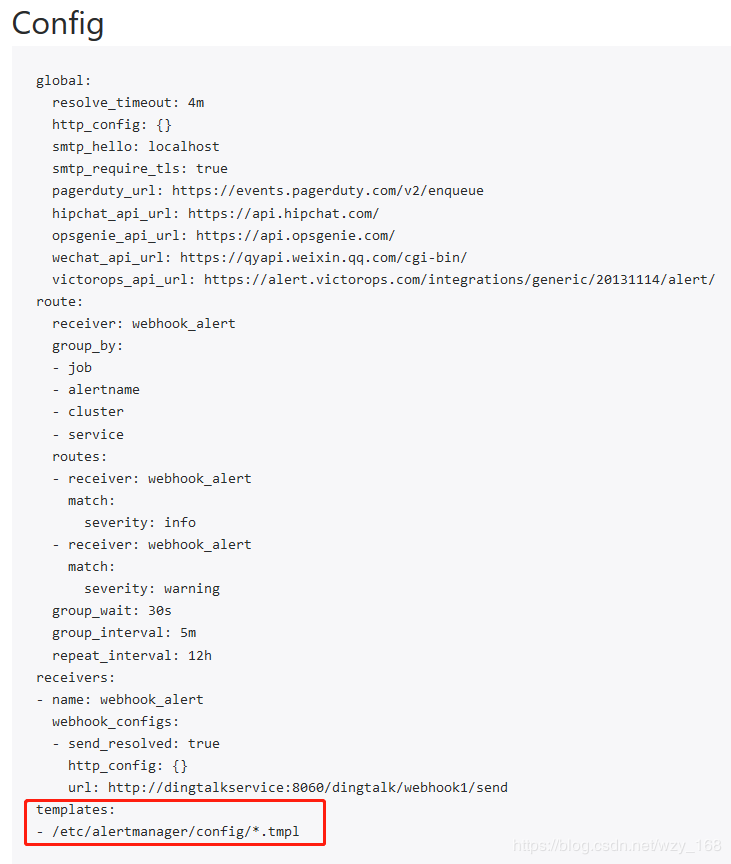

Create a new alertmanager.yaml file with the following content:

global:
  resolve_timeout: 4m
receivers:
- name: webhook_alert
  webhook_configs:
  - send_resolved: true
    url: http://dingtalkservice:8060/dingtalk/webhook1/send
route:
  group_by:
  - job
  - alertname
  - cluster
  - service
  group_interval: 5m
  group_wait: 30s
  receiver: webhook_alert
  repeat_interval: 12h
  routes:
  - match:
      severity: info
    receiver: webhook_alert
  - match:
      severity: warning
    receiver: webhook_alert
templates:
- '*.tmpl'

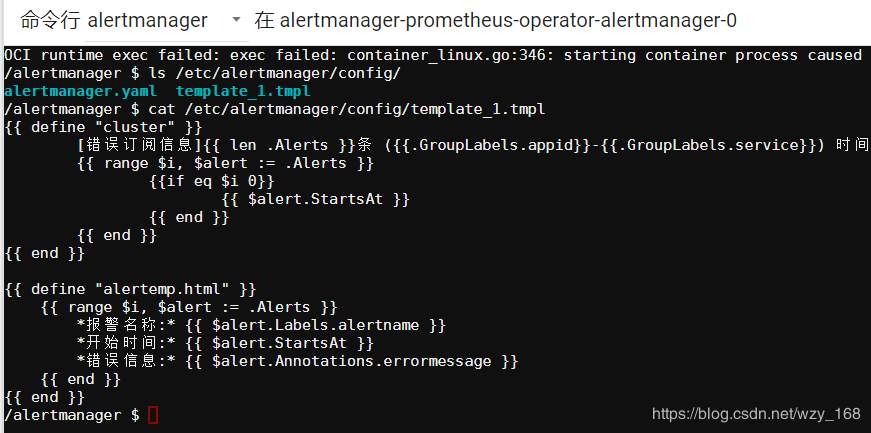
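The routes section forms a tree. An alert enters at the root and descends into the first child route whose matchers it satisfies. With the default continue: false, matching stops there. If no child matches, the root's own receiver handles the alert. The Go sketch below models that behavior in simplified form, with exact-match labels and no receiver inheritance. The `Route` type and `receiversFor` are hypothetical. The receiver names `info-hook` and `warning-hook` in `main` are also hypothetical, since every route above points to webhook_alert.

```go
package main

import "fmt"

// Route is a simplified routing-tree node with exact-match labels.
// Receiver inheritance and regex matchers are left out of this sketch.
type Route struct {
	Match    map[string]string
	Receiver string
	Continue bool // false by default, as with continue in Alertmanager
	Routes   []*Route
}

func (r *Route) matches(labels map[string]string) bool {
	for k, v := range r.Match {
		if labels[k] != v {
			return false
		}
	}
	return true
}

// receiversFor walks the tree depth-first. The first matching child handles
// the alert unless it sets Continue; if no child matches, this node does.
func receiversFor(r *Route, labels map[string]string) []string {
	var out []string
	for _, child := range r.Routes {
		if !child.matches(labels) {
			continue
		}
		out = append(out, receiversFor(child, labels)...)
		if !child.Continue {
			break
		}
	}
	if len(out) == 0 {
		out = []string{r.Receiver}
	}
	return out
}

func main() {
	// Same shape as the route above, with hypothetical distinct receivers.
	root := &Route{
		Receiver: "webhook_alert",
		Routes: []*Route{
			{Match: map[string]string{"severity": "info"}, Receiver: "info-hook"},
			{Match: map[string]string{"severity": "warning"}, Receiver: "warning-hook"},
		},
	}
	fmt.Println(receiversFor(root, map[string]string{"severity": "warning"}))
	fmt.Println(receiversFor(root, map[string]string{"severity": "critical"}))
}
```

A warning alert goes to warning-hook. A critical alert matches no child route, so it falls back to the root receiver webhook_alert.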

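Here we added a webhook receiver, and the templates section at the end loads template files, so we also need to create a template file, e.g. template_1.tmpl. Note that a webhook receiver always sends Alertmanager's fixed JSON payload, so these templates are not applied to it. They take effect only where a notifier's fields reference them, for example in email or Slack receivers.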

{{ define "cluster" }}
[Error subscription info] {{ len .Alerts }} alert(s) ({{.GroupLabels.appid}}-{{.GroupLabels.service}}) time
{{ range $i, $alert := .Alerts }}
{{ if eq $i 0 }}
{{ $alert.StartsAt }}
{{ end }}
{{ end }}
{{ end }}

{{ define "alertemp.html" }}
{{ range $i, $alert := .Alerts }}
*Alert name:* {{ $alert.Labels.alertname }}
*Start time:* {{ $alert.StartsAt }}
*Error message:* {{ $alert.Annotations.errormessage }}
{{ end }}
{{ end }}

The template above is based on: http://blog.microservice4.net/2018/12/05/alertmanager/

First, delete the existing Secret:

kubectl delete secret alertmanager-prometheus-operator-alertmanager -n monitoring

Then create a new Secret from the files just created:

kubectl create secret generic alertmanager-prometheus-operator-alertmanager -n monitoring --from-file=alertmanager.yaml --from-file=template_1.tmpl

After creating it, check the logs for errors:

kubectl logs alertmanager-prometheus-operator-alertmanager-0 -c alertmanager -n monitoring

You can also view the logs in the Dashboard, or check inside the Alertmanager container.


Finally, check on the Alertmanager page that the Config has been updated.


At this point, the Alertmanager default configuration has been updated.

The steps above are based on https://www.qikqiak.com/post/prometheus-operator-custom-alert/.

The webhook in the configuration above uses DingTalk for notifications.

Webhook notification mechanism

In Alertmanager, a webhook-based alert receiver can be defined with the following configuration. A receiver can contain a list of webhook configurations.

name: <string>
webhook_configs:
  [ - <webhook_config>, ... ]

Each webhook_config has the following format:

# Whether or not to notify about resolved alerts.
[ send_resolved: <boolean> | default = true ]

# The endpoint to send HTTP POST requests to.
url: <string>

# The HTTP client's configuration.
[ http_config: <http_config> | default = global.http_config ]

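send_resolved specifies whether a notification is also sent when an alert is resolved. url is the address that receives the webhook requests. http_config is used when the requests need HTTP client settings such as TLS/SSL options.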

Once a webhook receiver is defined, Alertmanager sends an HTTP POST request to the configured URL whenever an alert fires. The request body has the following format:

{
  "version": "4",
  "groupKey": <string>,      // key identifying the group of alerts (e.g. to deduplicate)
  "status": "<resolved|firing>",
  "receiver": <string>,
  "groupLabels": <object>,
  "commonLabels": <object>,
  "commonAnnotations": <object>,
  "externalURL": <string>,   // backlink to the Alertmanager.
  "alerts": [
    {
      "labels": <object>,
      "annotations": <object>,
      "startsAt": "<rfc3339>",
      "endsAt": "<rfc3339>"
    }
  ]
}

DingTalk robot

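Once a webhook robot has been created, you can send an HTTP POST request to its address by any means to post a message to the group. Custom robots support message types including text, link, and markdown.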

For example, you can POST the following body to the webhook address:

{
  "msgtype": "markdown",
  "markdown": {
    "title": "Prometheus alert",
    "text": "#### Metric\n> Description\n\n> ###### Alert time \n"
  },
  "at": {
    "atMobiles": [
      "156xxxx8827",
      "189xxxx8325"
    ],
    "isAtAll": false
  }
}

You can use curl to verify that the DingTalk webhook can be called successfully:

$ curl -H "Content-Type: application/json" -X POST -d '{"msgtype": "markdown","markdown": {"title":"Prometheus alert","text": "#### Metric\n> Description\n\n> ###### Alert time \n"},"at": {"isAtAll": false}}' 'https://oapi.dingtalk.com/robot/send?access_token=xxxx'

{"errcode":0,"errmsg":"ok"}

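To forward Alertmanager notifications to DingTalk, you need a converter in between, because Alertmanager's webhook payload (shown above) is not in the message format the DingTalk robot accepts.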

Deploying the converter: run it in Kubernetes as a Deployment, then create a Service for Alertmanager to call.

The complete alerting pipeline

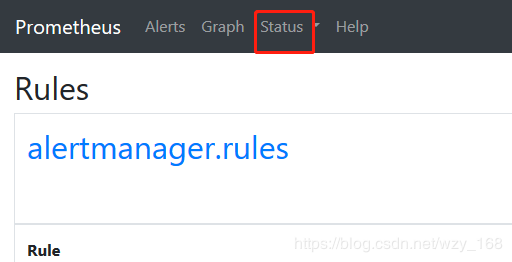

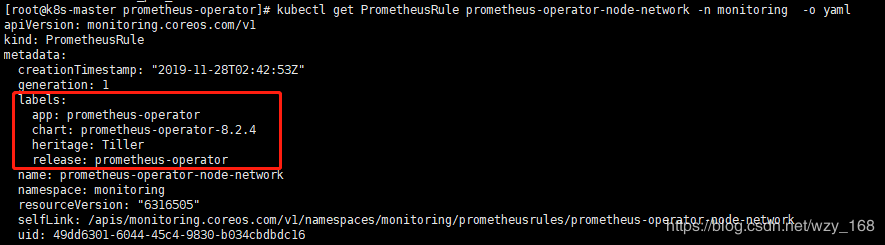

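- First, Prometheus fires alerts according to its alerting rules. To add, remove, or modify rules, see PrometheusRule.

- Prometheus sends the alerts to Alertmanager, which applies its silencing, inhibition, grouping, and other settings.

- In Alertmanager, configure a webhook whose URL is the address of the DingTalk converter.

- In the DingTalk converter, set the webhook URL to the DingTalk robot's webhook address.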

Prometheus alert states

A Prometheus alert can be in one of three states: Inactive, Pending, and Firing.

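- Inactive: the rule is being evaluated, but its condition is not currently met, so no alert is active.

- Pending: the alert condition is true, but it has not yet held for the duration set by the rule's "for" clause. Once it has held that long, the alert moves to Firing.

- Firing: the condition has held for at least the "for" duration, and Prometheus sends the alert to Alertmanager. Alertmanager applies grouping, inhibition, and silencing and delivers the alert to the configured receivers. When the condition clears, the alert is resolved and returns to Inactive, and the cycle repeats.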

References